Encoding ISO 3166 in RDF with SKOS

29 January 2008 at 00:56 · No comments

Last year at the 2nd International Conference on Metadata and Semantics Research (MTSR 2007) I gave a talk about the Simple Knowledge Organisation System (SKOS) and its application to encoding ISO 3166 country codes. The revised paper “Encoding changing country codes for the Semantic Web with ISO 3166 and SKOS” is finally ready to appear in the post-proceedings. The preprint is accessible at http://arxiv.org/abs/0801.3908. I raised three issues (notations, nesting concept schemes, and versioning) that are not covered by the current SKOS draft. The proposed solutions are nevertheless compliant with it, except for the nesting of concept schemes with the RDF property skos:member, which could be made possible by making skos:ConceptScheme an RDF subclass of skos:Collection. The paper can also be used as a general introduction to SKOS, especially for encoding authority files. More details can be found in the paper and on the public-esw-thes@w3.org mailing list. SKOS is going to become a W3C recommendation this year.
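To give a rough idea of what such an encoding looks like, here is a minimal Python sketch that writes a single country code as a SKOS concept in Turtle. The namespace `http://example.org/iso3166/`, the scheme URI and the function name `country_concept` are made up for illustration and are not the URIs used in the paper. Only `skos:Concept`, `skos:prefLabel` and `skos:inScheme` come from the SKOS vocabulary. The code itself appears only in the URI here, since notations are one of the open issues mentioned above.

```python
SKOS = "http://www.w3.org/2004/02/skos/core#"
BASE = "http://example.org/iso3166/"  # hypothetical namespace, not from the paper


def country_concept(code: str, label: str, lang: str = "en") -> str:
    """Return a Turtle snippet describing one country code as a skos:Concept.

    The label is inserted as-is, so it must not contain double quotes.
    """
    return (
        f"@prefix skos: <{SKOS}> .\n"
        f"\n"
        f"<{BASE}{code}> a skos:Concept ;\n"
        f'    skos:prefLabel "{label}"@{lang} ;\n'
        f"    skos:inScheme <{BASE}scheme> .\n"
    )


if __name__ == "__main__":
    print(country_concept("DE", "Germany"))
```

A real encoding would also have to deal with changes over time (codes that are withdrawn or reassigned), which is the versioning issue discussed in the paper and is not covered by this sketch.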

Citation parsing

24 January 2008 at 19:09 · 6 comments

Citation analysis is used to rate authors (problematic) and to find interesting papers (good idea). Citations of papers at the famous arXiv.org preprint server are analysed by CiteBase, which is very useful. Unfortunately it is buggy and does not always work. I really wonder why the full text of a paper is parsed instead of using the BibTeX source. The citation parser ParaCite was developed in the Open Citation Project. Since then it seems to have been more or less abandoned. But it is open source, so you can test your papers before uploading them, and one could take the suitable parts to build a better citation parser. I found that you can extract the citations from a document in $file (for instance a PDF) with Perl like this (the required modules are available at CPAN):

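    use strict;
    use warnings;
    use Data::Dumper;
    use Biblio::Citation::Parser::Citebase;
    use Biblio::Document::Parser::Utils;
    use Biblio::Document::Parser::Brody;

    # Path of the document to analyse, taken from the command line
    my $file = shift @ARGV or die "usage: $0 FILE\n";

    # Get the text of the document and split it into reference strings
    my $content    = Biblio::Document::Parser::Utils::get_content($file);
    my $doc_parser = Biblio::Document::Parser::Brody->new;
    my @references = $doc_parser->parse($content);

    # Parse each reference string into metadata and dump the result
    my $parser = Biblio::Citation::Parser::Citebase->new;
    for my $i (0 .. $#references) {
        my $metadata = $parser->parse($references[$i]);
        print '[' . ($i + 1) . '] ' . Dumper($metadata) . "\n";
    }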

In the documents that I tested there are almost always parsing errors, but it is better than nothing. I wonder what CiteSeer uses to extract citations. There is more activity in citation parsing in the Zotero project, which even has an IDE called Scaffold for creating new “translators” that extract bibliographic data from web pages. Another playground is Wikipedia, which contains a growing number of references. And of course there are the commercial citation indexes like SCI. I thought of using citation data for additional catalog enrichment (in addition to ISBN2Wikipedia), but the quality of the data seems to be too low and identifiers are missing.

P.S: Right after writing this, I found Alf Eaton’s experiment with collecting together the conversations around a paper from various academic, news, blog and other discussion channels – as soon as you have identifiers (ISBN, URL, DOI, PMID…) the world gets connected 🙂

P.P.S: ParsCit seems to be a good new reference string parsing package (open source, written in Perl).

P.P.S: Konstantin Baierer manages a bibliography on citation parsing for his parser Citation::Multi::Parser.

thingISBN is getting better – so does SeeAlso

17 January 2008 at 00:05 · No comments

Tim Spalding points out that thingISBN is getting better [via FRBR blog], which is a good reason to update the thingISBN data of our SeeAlso linkserver isbn2librarything. It now includes 2,994,299 ISBNs, almost 12 times the size of the isbn2wikipedia linkserver. But what is a linkserver? A linkserver is a server that receives an identifier (for instance an ISBN) and returns links (for instance a link to a work at LibraryThing). You can try out some SeeAlso linkservers at a demo page or start to implement your own.

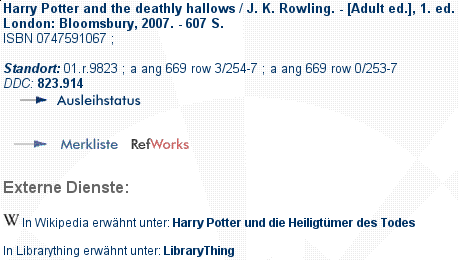

To get an impression, look in GBV union catalog, for instance this record: after a short moment, a link to German Wikipedia is included. More and more German libraries follow to include such additional links in their catalog. The Catalog of University Bremen includes both links to (German) Wikipedia and links to LibraryThing (you have to click at „weitere Informationen…“ at a selected record):

There is also an experimental ISBN2WorldCat linkserver. But linkservers that only return one or zero links are not very effective. I could combine multiple services into something like ISBN2SocialCataloging. Any suggestions for other services that libraries might not be frightened to link to? I would also like to include more data in the link to LibraryThing (for instance the number of reviews), but until German libraries wake up and ask for LibraryThing for Libraries I had better not reimplement services that LibraryThing already offers.

The linkserver model could also be used to provide links to library holdings and thus implement a mapping from FRBR Manifestation to FRBR Item. As I just wrote at CODE4LIB and PERL4LIB, a simple data format for holding information is needed to do so.

First Draft of SeeAlso Simple linkserver API

14 January 2008 at 23:45 · 2 comments

I finally finished the first draft of the SeeAlso Simple Specification. The webservice API for simple linkservers is based on OpenSearch Suggestions. It will be complemented by the “SeeAlso Full Specification”, which adds unAPI and OpenSearch Description documents. The service is already implemented and running at the Union Catalog of GBV; see my earlier posting or simply have a look at this example. The same service is installed for testing at the Wikimedia Toolserver. For Wikipedia I drafted a different client that can be tested via a bookmarklet: drag the link “ISBN2W” to your bookmark toolbar, visit an English or German Wikipedia article with ISBNs on it, click the bookmarklet link in your toolbar and hover over the ISBNs (which will turn yellow) with the mouse. German Wikipedia users can also enable the service by adding a line of JavaScript to their user profile.

An implementation (SeeAlso Simple and SeeAlso Full) in Perl is available at CPAN (there was neither an unAPI nor an OpenSearch Suggestions module, so I implemented both). Please note that ISBN to Wikipedia is only one example of how the API can be used. In my opinion the concept of a linkserver is highly undervalued but will become more important again (for instance as a lightweight alternative to simple SPARQL queries). Feedback and usage are welcome!
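As a rough illustration of what a linkserver returns, the following Python sketch builds a response in the four-element JSON array layout of OpenSearch Suggestions: the queried identifier, a list of labels, a list of descriptions and a list of URLs. The function name `seealso_response`, the sample ISBN and the `example.org` URL are assumptions made for this example; the exact requirements are defined in the SeeAlso Simple Specification, not here.

```python
import json


def seealso_response(identifier, links):
    """Build an OpenSearch Suggestions style response for one identifier.

    `links` is a list of (label, description, url) tuples. The result is a
    JSON array of the form [identifier, [labels], [descriptions], [urls]].
    """
    labels = [label for label, _, _ in links]
    descriptions = [description for _, description, _ in links]
    urls = [url for _, _, url in links]
    return json.dumps([identifier, labels, descriptions, urls])


if __name__ == "__main__":
    # Hypothetical sample data: one link for one ISBN
    print(seealso_response(
        "978-3-16-148410-0",
        [("Example work", "Work page at an example service",
          "http://example.org/work/1")],
    ))
```

An identifier without any links simply yields three empty lists, so a client can treat "no links" and "some links" in the same way.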

First draft of OAI-ORE

30 December 2007 at 18:06 · No comments

“Web 3.0” (or “Semantic Web”, use the buzzword of your choice) is slowly on the rise. Two weeks ago the first public draft of OAI-ORE was published and Mike Giarlo published an OAI-ORE plugin for WordPress. I have not actually tried it, but as far as I understand one could add RFC 5005 to OAI-ORE to support large resource sets. Or is OAI-PMH enough? Well, in the end it depends on the availability of software libraries and clients and on the ease of connecting it with other services. To my taste there are still too many generalized data models, whereas what we need are concrete implementations: it was not RDF and OWL but Microformats that got the Web of data started (yes, we are in it: the next hype after “Web 2.0”). For 2008 I wish for less abstract meta-meta-meta-stuff and more small, usable applications and services that can be combined.

Google-Wikipedia-Connection and the decay of academia

10 December 2007 at 02:49 · 6 comments

Mathias pointed [de] me to a lengthy and partly ridiculous “Report on dangers and opportunities posed by large search engines, particularly Google” by Hermann Maurer [de] (professor at the IICM, Graz) and various co-authors, among them Stefan Weber, whose book [de] I already wrote about [de]. Weber, like Debora Weber-Wulff, is known for detecting plagiarism in academia, a growing problem with the rise of Google and Wikipedia, as Weber points out. But in the current study he (and/or his colleagues) produced so much nonsense that I could not leave it uncommented.

Continue reading “Google-Wikipedia-Connection and the decay of academia”…

Linking to Wikipedia via ISBN

5 December 2007 at 19:52 · 2 comments

Two weeks ago I announced a new beta webservice for linking from title data to German Wikipedia articles that cite a given work by ISBN. Now Lambert pointed me to a message by Eric Hellmann about xISBN supporting links to the English Wikipedia since last week. This makes GBV one week earlier than OCLC, and both of us ten months later than LibraryThing. The idea is not new but based on the work of Lars Aronsson, spiritus rector of future library systems.

The three services have been developed partly in parallel, based on some of the same code. Here are two examples of the xISBN service by Xiaoming Liu and the GBV service that I am developing. xISBN is based on a powerful API with many query parameters and is planned to become part of WorldCat Grid Services (more about this maybe here, here, here, and here), while my service is based on a simple API called SeeAlso, which in turn is based on OpenSearch Suggestions and unAPI. Both approaches have their pros and cons. I will provide more information as soon as our server is more stable and the scripts are clean enough to be published at CPAN. Meanwhile I encourage everyone to play with OCLC’s xISBN (via an API of its own), WorldCat Identities (via SRU), LibraryThing for Libraries (via an API of its own), available catalogues (via Z39.50, via SRU and via other APIs), the Wikipedia Query API, and the growing number of other services. Let’s mash up the library systems for the future!

Social Cataloging in Wikipedia

5 December 2007 at 01:19 · No comments

Last week I gave an introduction to social tagging and cataloging for librarians (some German slides here at Slideshare). In a discussion on German Wikipedia about COinS I was pointed to the French Wikipedia: they have a special namespace référence to store more detailed bibliographic information (see MediaWiki namespaces in general). Some more information is collected in Projet:Références, but my French is too limited to find out much. This example may demonstrate the concept:

The article “Première période intermédiaire égyptienne” cites the source “Nicolas Grimal, Histoire de l’Égypte ancienne, 1988”. The citation links to a special page that lists several editions of the work. Actually this is another implementation of FRBR.

I like the idea of separating the full bibliographic record from the reference in the Wikipedia article, but the concrete solution is too complicated and limited. Wikipedia with flat text is just not the right tool to store bibliographic data. Maybe Semantic MediaWiki can help, but a multilingual approach as in LibraryThing is better. French Wikipedians should not have to duplicate cataloging efforts, but just point to LibraryThing, WorldCat or whatever bibliographic authority is usable. By the way, most library catalogues are not usable in this sense: on the Web no one cares how good your data is if you cannot directly link to it and use it in other contexts.

Relevant APIs for (digital) libraries

30 November 2007 at 14:50 · 5 comments

My current impression is that the OCLC/WorldCat Service Grid is still far too abstract. Instead of creating a framework, we (libraries and library associations) should agree upon some open protocols and (metadata) formats. To start with, here is a list of relevant, existing open standard APIs from my point of view:

Search: SRU/SRW (including CQL), OpenSearch, Z39.50

Harvest/Syndicate: OAI-PMH, RSS, Atom Syndication (also with ATOM Extensions)

Copy/Provide: unAPI, COinS, Microformats (not a real API but a way to provide data)

Upload/Edit: SRU Update, Atom Publishing Protocol

Identity Management: Shibboleth (and other SAML-based protocols), OpenID (see also OSIS)

For more complex applications, additional (REST) APIs and common metadata standards need to be found (or defined), but only if the application is not just another kind of search, harvest/syndicate, copy/provide, upload/edit, or identity management.
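To show how little is needed to use one of the search protocols listed above, here is a minimal Python sketch that builds an SRU searchRetrieve request URL with a CQL query. The endpoint `http://example.org/sru`, the function name `sru_search_url` and the sample query are assumptions for illustration; the parameters `version`, `operation`, `query` and `maximumRecords` are standard SRU request parameters, but which indexes and versions a given server supports has to be looked up in its own documentation.

```python
from urllib.parse import urlencode


def sru_search_url(base_url, cql_query, max_records=10):
    """Return the URL of an SRU searchRetrieve request for a CQL query."""
    params = {
        "version": "1.1",
        "operation": "searchRetrieve",
        "query": cql_query,              # CQL, e.g. dc.title = "semantic web"
        "maximumRecords": str(max_records),
    }
    # urlencode percent-encodes the CQL query so it is safe inside a URL
    return base_url + "?" + urlencode(params)


if __name__ == "__main__":
    # Hypothetical endpoint; replace it with the SRU base URL of a real catalogue
    print(sru_search_url("http://example.org/sru", 'dc.title = "semantic web"', 5))
```

The response is an XML document that can be read with any XML parser, which is exactly why such a protocol is easy to combine with other services.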

P.S: I forgot NCIP, a “standard for the exchange of circulation data”. Frankly, I don’t fully understand the meaning and importance of “circulation data”, and the standard looks more complex than needed. More on APIs for libraries can be found in the WorldCat Developer Network, in the Jangle project and in a DLF working group on digital library APIs. For staying in the limited world of libraries this may suffice, but on the web simplicity and availability of implementations matter. That is why I am working on the SeeAlso linkserver protocol and now on a simple API to query availability information (more in August/September 2008).

P.P.S: A more detailed list of concrete library-related APIs was published by Roy Tennant based on a list by Owen Stephens.

P.P.S: And another list by Stephen Abram (SirsiDynix) from September 1st, 2009

OCLC Grid Services – first insights

28 November 2007 at 10:58 · 1 comment

I am just sitting in a library developer meeting at OCLC|PICA in Leiden to get to know more about OCLC Service Grid, WorldCat Grid, or whatever the new service-oriented product portfolio of OCLC will be called. As Roy Tennant pointed out, our meeting is “completely bloggable”, so here we are: a dozen European systems librarians of sorts.

The “Grid Services” that OCLC is going to provide are based on the “OCLC Services Architecture” (OSA), a framework by which network services are built. I am fundamentally sceptical of additional frameworks, but let’s have a look.

The basic idea of services is to provide a set of small methods for a specific purpose that can be accessed via HTTP. People can then use these services to build and share unexpected applications with them, a principle known as mashups.

The OCLC Grid portfolio will have four basic pillars:

network services: search services, metadata extraction, identity management, payment services, social services (voting, commenting, tagging…) etc.

registries and data resources: bibliographic registries, knowledge bases, registries of institutions etc. (see WorldCat registries)

reusable components: a toolbox of programming components (clients, samples, source code libraries etc.)

community: a developer network, involvement in open source development etc.

Soon after social services were mentioned, a heavy discussion on reviews and commenting started. I find that the questions raised by user-generated content are less technical than social. Paul stressed that users are less and less interested in metadata and instead want direct access to the content of an information object (book, article, book chapter etc.). The community aspect is still somewhat vague to me; we had some discussion about it too. Service-oriented architecture also implies a different way of software engineering, which can partly be described by the “perpetual beta” principle. I am very excited about this change and how it will be practised at OCLC|PICA. Luckily I don’t have to think about the business model and the legal part, which are not trivial: everyone wants to use services for free, but services need work to get established and maintained, so how do we best distribute the costs among libraries?

That’s all for the introduction, we will get into more concrete services later.
