First explicit design of a digital library (1959)

18 March 2018, 23:38

I am researching digital libraries (once again) and wondered when the term was first used. According to Google Books, after weeding out false positives, „digital library“ first appears in 1959 in a report for the US Department of State. I have entered the bibliographic data in Wikidata. The report „The Need for Fundamental Research in Seismology“ was written at the time to investigate how nuclear weapons tests can be detected by means of seismic waves.

In Appendix 19, John Gerrard, one of the fourteen scientists involved in the study, set out on two pages the need for a computing center with an IBM 704 computer. Since the US government document is in the public domain, here are the relevant pages:

The planned digital library is a collection of research data together with scientific software for gaining new insights from that data:

The following facilities should be available:

- A computer equivalent to the IBM 704 series, plus necessary peripheral equipment.

- Facilities for converting standard seismograms into digital form.

- A library of records of earthquakes and explosions in form suitable for machine analysis.

- A (growing) library of basic programs which have proven useful in investigations of seismic disturbances and related phenomena.

- …

Sounds fairly up to date, doesn't it? I also liked the description of the computing center as an „open shop“ and the remark „nothing can dampen enthusiasm for new ideas quite as effectively as long periods of waiting time“. In the text, the term „digital library“ refers primarily to the collection of digitized seismograms. At the end of the recommendation the term „digitized library“ is used instead, which suggests that the two terms were used synonymously. Interestingly, however, „library“ also refers to the collection of computer programs.

Unfortunately I could not find out whether the recommended computing center with its digital library was ever realized (probably not). About the author, Dr. John Gerrard, I know little more than that in 1957 he worked as Director of Data Systems and Earth Science Research at Texas Instruments (TI). TI was founded in 1930 as „Geophysical Service Incorporated“ for the seismic exploration of oil deposits and in 1965 received the government contract for monitoring nuclear weapons tests (Project Vela Uniform). A former colleague remembers Gerrard in this interview:

John Gerrard: into digital seismology, and he could see a little bit of the future of digital processing and he talked about how that could be effective in seismology, he was right that this would be important in seismology

There is a geologist of the same name in Birmingham, but he was only born in 1944. I suspect that at TI Gerrard was involved in developing the Texas Instruments Automatic Computer (TIAC), which was built specifically for the digital processing of seismic data.

Incidentally, computers only arrived in traditional libraries with the next generation of machines: the MARC format was developed in the 1960s with the IBM System/360 (by Henriette Avram, who had previously also worked with the IBM 701 at the NSA). Before that there was the fictional library computer EMMARAC (modeled on ENIAC and UNIVAC) in „Desk Set“, with Katharine Hepburn as a librarian and Spencer Tracy as a computer salesman.

Incidentally, until the end of the 1980s the term „digital library“ appears only sporadically in Google Books.

TPDL 2011 Doctoral Consortium – part 3

25 September 2011, 17:36

See also part 1 and part 2 of my conference blogging, and #TPDL2011 on Twitter.

My talk about general patterns in data was well received and I got some helpful input. I will write about it later. Steffen Hennicke, another PhD student of my supervisor Stefan Gradmann, then talked about his work on modeling archival finding aids, which may be encoded in EAD. The structure of EAD is often not suited to answer user needs. For this reason Hennicke analyses EAD data and reference questions to develop better structures that users can follow to find what they are looking for in archives. This is done with CIDOC-CRM as a high-level ontology, and the main result will be an expanded EAD model in RDF. To me the problem of „semantic gaps“ is interesting, and I am thinking about using some of Hennicke's data as an example to explain data patterns in my work.

The last talk, by Rita Strebe, was about the aesthetic user experience of websites. One aim of her work is to measure the significance of aesthetic perception. In particular, the hypotheses she evaluates in experiments are:

H1: On a high level, the viscerally perceived visual aesthetics of websites affects approach behaviour.

H2: On a low level, the viscerally perceived visual aesthetics of websites affects avoidance behaviour.

Methods and preliminary results look valid, but the relation to digital libraries seems weak, and so was the participants' familiarity with Strebe's motivation and methods. I suppose her work fits better into Human-Computer Interaction.

After the official part of the program, Vladimir Viro briefly presented his music search engine peachnote.com, which is based on scanned musical scores. If I were working in or with music libraries, I would not hesitate to contact Viro! I also thought about a search for free musical scores in the Wikimedia framework. The Doctoral Consortium ended with a general discussion about dissertations, science, libraries, users, and everything, as it should be 🙂

Digital libraries sleep away the web 2.0

1 October 2008, 23:58

From time to time I still publish on paper, so I have to deposit the publication in a repository to make it (and its metadata) available; mostly I use the „open archive for Library and Information Science“ named E-LIS. But each time I get angry because uploading and describing a submission is so complicated – especially compared to popular commercial repositories like Flickr, Slideshare, YouTube and such. These web applications pay a lot of attention to usability, which sadly is a low priority in many digital libraries.

I soon realized that E-LIS uses a very old version (2.13.1) of GNU EPrints – EPrints 3 has been available since December 2006 and there have been many updates since then. To find out whether it is common to run a repository with such outdated software, I did a quick study. The Registry of Open Access Repositories (ROAR) should list all relevant public repositories that run EPrints. With 30 lines of Perl I fetched the list (271 repositories) and queried each repository via OAI to find out the version number. Here is the summarized result:

76 x unknown (script failed to get or parse OAI response), 8 x 2.1, 18 x 2.2, 98 x 2.3, 58 x 3.0, 13 x 3.1

Of the 195 repositories whose version number I could determine, only 13 use the newest version 3.1 (released September 8th). Moreover, 124 still use version 2.3 or older. EPrints 2.3 was released before the web 2.0 hype in 2005! One true point of all this web 2.0 blah is the concept of „perpetual beta“: release early and often and follow user feedback, so your application will quickly improve. But most repository operators do not seem to have a real interest in improvement and in their users!
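The figures above can be cross-checked in a few lines. This is Python rather than the original Perl script (which is not part of this post), and the dictionary only restates the counts listed above; percentages are taken relative to the 195 repositories with a known version.

```python
# Counts from the summary above: EPrints version -> number of repositories.
counts = {"unknown": 76, "2.1": 8, "2.2": 18, "2.3": 98, "3.0": 58, "3.1": 13}

def as_tuple(version: str) -> tuple:
    """Turn '2.3' into (2, 3) so that versions compare numerically."""
    return tuple(int(part) for part in version.split("."))

total = sum(counts.values())         # all EPrints repositories listed in ROAR
known = total - counts["unknown"]    # version number could be determined
outdated = sum(n for v, n in counts.items()
               if v != "unknown" and as_tuple(v) <= (2, 3))
newest = counts["3.1"]

print(f"{total} listed, {known} with known version")
print(f"{outdated} on 2.3 or older ({100 * outdated / known:.1f}%)")
print(f"{newest} on 3.1 ({100 * newest / known:.1f}%)")
```

This prints 271 listed and 195 with a known version, 124 on 2.3 or older (63.6%), and 13 on 3.1 (6.7%).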

Ok, I know that managing and updating a repository server is work – I would not be the right guy for such a job – but then don't wail over low acceptance or wonder why libraries have an antiquated image. For real progress you should perpetually do user studies and engage in the development of your software. Digital libraries with fewer resources should at least join the community and follow updates to keep up to date.

P.S: E-LIS has updated its software now (November 2008). A lot of missing features remain but those need to be implemented in EPrints first.

Insight into the Digital Library Reference Model

18 September 2008, 18:31

On the first post-ECDL-conference day I participated in the Third Workshop on Foundation of Digital Libraries (DLFoundations 2008), which was organized by the DELOS Network of Excellence on Digital Libraries. One major work of DELOS is the DELOS Digital Library Reference Model (DLRM). The DLRM is an abstract model to describe digital libraries; it can be compared to the CIDOC Conceptual Reference Model (CIDOC-CRM) and the Functional Requirements for Bibliographic Records (FRBR) – and it shares some of their problems: a lack of availability and, as a result, a lack of implementations.

The DLRM is „defined“ in a 213-page PDF file – this is just not usable! Have a look at how W3C or IETF define standards nowadays. As DLRM is a conceptual model, you must also provide an RDF representation, or it is simply unavailable for serious applications on the web. And of course the silly copyright statement should be removed in favor of a CC license. That's the formal part. As for the content, DLRM has 218 concepts and 52 relations – which is far too much to start with. But: there are some really useful ideas behind DLRM.

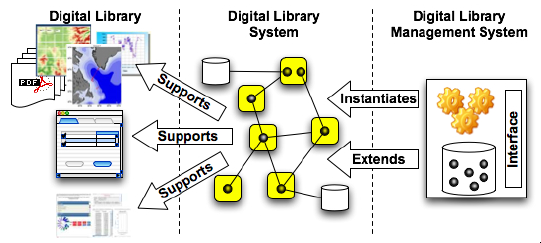

The reference model includes a division of „digital library“ into three levels of conceptualization (see image): first the visible digital library (the collection that users work with), second the digital library system (the software application that is installed and configured to run a digital library), and third the digital library management system (the software system that supports the production and administration of digital libraries). This division can be helpful to understand and talk about digital libraries – although I am not sure whether the division between digital library systems and digital library management systems is such a good one.

Besides general talks about the Digital Library Reference Model, the workshop provided some experience from practice by Wolfram Horstmann (DRIVER project) and by Georg Eckes (Deutsches Filminstitut) – never underestimate good real-world examples! The most refreshing talk was given by Joan Lippincott (Coalition for Networked Information). She pointed out that much more than traditional repositories can be viewed as digital libraries. User-generated content in particular can constitute a digital library. A useful model for digital libraries should also fit collections at Flickr, YouTube, wikis, weblogs etc., and users can mash up resources to create new digital library services, for instance the species search engine iSpecies. She is sooo right! In addition, Joan mentioned initiatives to broaden the use of authority files and identity management. Another direct hit! If digital libraries only focus on interoperability with other „official“ digital libraries, they will not survive. Libraries are only one small player in the digital knowledge environment, and that infrastructure is not defined by them alone.

I enjoyed the workshop; I really like the digital library community and I am happy to be part of it. But some parts still seem to live in an ivory tower. If the digital library reference model is not quickly applied to real applications (both repositories like those built with DSpace, Fedora, EPrints etc. and open systems like YouTube, Wikipedia, Slideshare…), it is nothing but an interesting idea. The digital revolution is taking place anyway, so let's better be part of it!

P.S: The slides will soon be available at the Workshop’s website.

Jangle API for library systems

10 July 2008, 15:00

The VuFind project is considering using Jangle as a general interface for library systems instead of adapting the connection anew for every system. Jangle is driven by Ross Singer (Talis) and apparently stands both in competition and in cooperation with the DLF working group on digital library APIs. Whether Jangle will amount to anything and whether it will catch on is still open; at least more technical expertise is involved than in other library standards.

I think it is important at least to stay roughly up to date and, if necessary, to try to exert influence in case the development completely misses one's own needs. I have to admit, though, that I have not fully understood Jangle yet. Instead of developing a new all-encompassing API, in my opinion it would be better to clean up the existing interfaces first; afterwards they can still be combined in Jangle or something similar.

Larry contrasts Jangle with Primo: on the one side the systematic open source approach, which you first have to get familiar with but which is more efficient and flexible overall, and on the other side the expensive, inflexible all-in-one product that looks stylish in return. Unfortunately decision makers mostly care about the façade, so open source is catching on only slowly (but surely).

First impressions from the Europeana meeting

23 June 2008, 12:02

Today and tomorrow I am participating in a Europeana/EDLnet conference in Den Haag. Europeana is a large EU-funded project to create a „European Digital Library“. Frankly speaking, I cannot give a simple definition of Europeana because in the first instance it is just a buzzword. You can ask whether library portals have a future at all, and Europeana has many ingredients that may make it fail: a large ambitious plan, a tight schedule, and many different participants with different languages, cultures and needs. The current Europeana Outline Function Specification partly reads like a magic wishlist. Instead of following one simple, good idea from the bottom up, it looks like an attempt to follow many ideas from the top down.

But if you see Europeana less as a monolithic project and more as a network of participants (libraries, museums, and archives) that try to agree on standards to improve interoperability, the attempt seems more promising. In her introductory speech Jill Cousins (director of Europeana) stressed the importance of APIs and ways to export information from Europeana, so that other institutions can build their websites and mashups with services and content from Europeana. I hope that open content repositories like Wikimedia Commons and Wikisource can act both as source and as target of exchange with the European Digital Library. What I also found interesting is that Google and Wikipedia are seen as the default role models, or at least as important examples of portals to compare with. The talks I have seen so far give me the impression that the view on possibilities and roles for libraries, museums, and archives is more realistic than I thought. One of their strengths is that they hold the content and are responsible for it – in contrast to user-generated collections like YouTube, Slideshare, and (partly) Wikipedia. But in general, cultural institutions are only one player among others on the web – so they also need to develop further and „let the data flow“. If the Europeana project helps in this development, it is a good project, no matter whether you call it a „European Digital Library“ or not.

The next short talks were about CIDOC-CRM and about OAI-ORE – both complex techniques that you cannot easily describe in a short time (unless you are a really good Wikipedia author ;-), but at least my idea of them has improved.

Digital libraries 8 years ago

27 May 2008, 02:52

I no longer remember exactly how Google led me to this presentation, and it is actually uncool to point to slides that are not on Slideshare (is anyone finally archiving those, by the way?); but somehow I was rather impressed by how little of the introductory workshop on „Digital Libraries“ at the second CARNet conference (Zagreb, 2000) has become outdated so far. Despite all the justification for initiatives like „Library 2.0“, the following holds: the fundamental developments in librarianship are long-term and have been foreseeable for more than 10 years. Automation keeps advancing steadily (in libraries too), and whatever does not become digital becomes marginal. Access is no longer restricted to one place, everything can be copied at will, and communication increasingly takes place in digital space as well. So far, so predictable.

Just as impressive as the continuity of this development, however, is how often people working in (digital) libraries lack the most elementary basics. My standard example (confirmed again and again in practice!) is the widespread ignorance of the fundamental differences between a simple search engine and a metasearch engine. To get your own feel for these and other basic concepts independently of all the hype (further ad-hoc examples: „data format“, „harvesting“, „feed“, „community“…), you have to engage with them again and again. So it is good that there are training courses like the workshop mentioned at the beginning. The series Lernen 2.0 makes a small contribution for self-study.
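To make the distinction concrete, here is a toy sketch in Python. It is illustrative only: the in-memory indexes and record ids are hypothetical, and the merging strategy (drop duplicates, keep order of first appearance) is a naive assumption. The point is that a search engine answers a query from its own index, while a metasearch engine has no index of its own and only forwards the query to other engines and merges what comes back.

```python
from typing import Callable, Dict, List

Index = Dict[str, List[str]]          # term -> ids of matching records
Engine = Callable[[str], List[str]]   # anything that can answer a query

def search(index: Index, term: str) -> List[str]:
    """Search engine: answers the query from its OWN index."""
    return index.get(term.lower(), [])

def metasearch(engines: List[Engine], term: str) -> List[str]:
    """Metasearch engine: no index of its own; it passes the query on to
    other engines and merges their results (naively: duplicates removed,
    order of first appearance kept)."""
    merged: List[str] = []
    for engine in engines:
        for record_id in engine(term):
            if record_id not in merged:
                merged.append(record_id)
    return merged

# Two hypothetical catalogs, each with its own search engine.
catalog_a: Index = {"seismology": ["a1", "a2"]}
catalog_b: Index = {"seismology": ["b7", "a2"]}
engines: List[Engine] = [lambda t: search(catalog_a, t),
                         lambda t: search(catalog_b, t)]
print(metasearch(engines, "Seismology"))  # ['a1', 'a2', 'b7']
```

Whatever the metasearch engine returns depends entirely on what the underlying engines deliver and how quickly they answer; in practice, recognizing that two hits are the same record is much harder than comparing ids as done here.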

Working group on digital library APIs and possible outcomes

13 April 2008, 14:48

Last year the Digital Library Federation (DLF) formed the „ILS Discovery Interface Task Force“, a working group on APIs for digital libraries. See their agenda and the current draft recommendation (15 February) for details [via Panlibus]. I'd like to briefly comment on the essential functions they agreed on at a meeting with major library system (ILS) vendors. Peter Murray summarized the functions as „automated interfaces for offloading records from the ILS, a mechanism for determining the availability of an item, and a scheme for creating persistent links to records.“

On the one hand, I welcome vendors trying to agree on (open) standards and service-oriented architecture. On the other hand, the working group is yet another top-down effort to discuss things that just need to be implemented based on existing Internet standards.

1. Harvesting: In the library world this is mainly done via OAI-PMH. I'd also consider RSS and Atom. To fetch single records, there is unAPI – which the DLF group does not mention. There is no need for any other harvesting API – missing features (if any) should be integrated into extensions and/or next versions of OAI-PMH and Atom instead of inventing something new. P.S: Google Wave shows what to expect in the next years.

2. Search: There is still good old overblown Z39.50. The near future is (slightly overblown) SRU/SRW and (simple) OpenSearch. There is no need for discussion but for open implementations of SRU (I am still waiting for a full client implementation in Perl). I suppose that next generation search interfaces will be based on SPARQL or other RDF-stuff.

3. Availability: The announcement says: „This functionality will be implemented through a simple REST interface to be specified by the ILS-DI task group“. Yes, there is definitely a need (in December I wrote about such an API in German). However, the main point is not the API but defining what „availability“ means. Please focus on this. P.S: DAIA is now available.

4. Linking: For „Linking in a stable manner to any item in an OPAC in a way that allows services to be invoked on it“ (announcement) there is no need to create new APIs. Add and propagate clean URIs for your items and point to your APIs via autodiscovery (HTML link element). That's all. Really. To query and distribute general links for a given identifier, I created the SeeAlso API, which is used more and more in our libraries.
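How little code the existing standards need on the client side can be shown with a short sketch. It uses only the Python standard library, performs no network access, and all base URLs and the example page are hypothetical. For harvesting it builds OAI-PMH ListRecords requests and reads the resumptionToken that signals the next chunk. For search it builds an SRU 1.1 searchRetrieve URL (the CQL index `dc.title` is just an example; which indexes a server supports is announced in its explain record) and fills an OpenSearch URL template. For linking it does autodiscovery by collecting `<link>` elements from an item page; as far as I know, unAPI announces its server with `rel="unapi-server"`, which is used as the example here.

```python
import re
import xml.etree.ElementTree as ET
from html.parser import HTMLParser
from typing import Dict, List, Optional
from urllib.parse import quote, urlencode

OAI_NS = "{http://www.openarchives.org/OAI/2.0/}"

# Harvesting with OAI-PMH
def list_records_url(base_url: str, token: Optional[str] = None,
                     metadata_prefix: str = "oai_dc") -> str:
    """The first request names a metadataPrefix; follow-up requests send only
    the resumptionToken, which OAI-PMH defines as an exclusive argument."""
    if token:
        params = {"verb": "ListRecords", "resumptionToken": token}
    else:
        params = {"verb": "ListRecords", "metadataPrefix": metadata_prefix}
    return base_url + "?" + urlencode(params)

def resumption_token(xml_text: str) -> Optional[str]:
    """Token for the next chunk, or None if the list is complete."""
    root = ET.fromstring(xml_text)
    el = root.find(f"{OAI_NS}ListRecords/{OAI_NS}resumptionToken")
    if el is None or not (el.text or "").strip():
        return None
    return el.text.strip()

# Search with SRU (query in CQL) or with an OpenSearch URL template
def sru_search_url(base_url: str, cql: str, max_records: int = 10) -> str:
    params = {"operation": "searchRetrieve", "version": "1.1",
              "query": cql, "maximumRecords": str(max_records)}
    return base_url + "?" + urlencode(params)

def opensearch_url(template: str, terms: str) -> str:
    """Insert the search terms; optional parameters such as {startPage?}
    that we do not set are replaced with an empty string."""
    url = template.replace("{searchTerms}", quote(terms))
    return re.sub(r"\{[^}]*\?\}", "", url)

# Linking: autodiscovery of services via <link> elements
class LinkCollector(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.links: List[Dict[str, str]] = []

    def handle_starttag(self, tag, attrs):
        # also called for self-closing <link ... /> tags
        if tag == "link":
            self.links.append({k: (v or "") for k, v in attrs})

def discover(html: str, rel: str) -> List[str]:
    """hrefs of all <link> elements whose rel attribute contains `rel`."""
    parser = LinkCollector()
    parser.feed(html)
    return [link.get("href", "") for link in parser.links
            if rel.lower() in link.get("rel", "").lower().split()]
```

For example, `list_records_url("http://example.org/oai")` yields `http://example.org/oai?verb=ListRecords&metadataPrefix=oai_dc`, and `discover(page, "unapi-server")` on an item page returns the URL of its unAPI server. A real harvester would add error handling, the `from`/`until` arguments and politeness delays, which are left out here.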

Furthermore, the draft contains a section on „Patron functionality“, which is going to be based on NCIP and SIP2. Both are dead ends from my point of view. You should better look at projects outside the library world and try to define schemas/ontologies for patrons and patron data (hint: patrons are also called „customers“ and „users“). Again: the problem is not that the API itself is underdefined – it's the data that we need to agree on.

OCLC Grid Services – first insights

28 November 2007, 10:58

I am currently sitting at a library developer meeting at OCLC|PICA in Leiden to learn more about OCLC Service Grid, WorldCat Grid, or whatever the new service-oriented product portfolio of OCLC will be called. As Roy Tennant pointed out, our meeting is „completely bloggable“, so here we are – a dozen European kind-of system librarians.

The „Grid Services“ that OCLC is going to provide are based on the „OCLC Services Architecture“ (OSA), a framework by which network services are built – I am fundamentally sceptical of additional frameworks, but let's have a look.

The basic idea of services is to provide a set of small methods for a specific purpose that can be accessed via HTTP. People can then use these services to build and share unexpected applications, so-called mashups.

The OCLC Grid portfolio will have four basic pillars:

- network services: search services, metadata extraction, identity management, payment services, social services (voting, commenting, tagging…) etc.

- registries and data resources: bibliographic registries, knowledge bases, registries of institutions etc. (see WorldCat registries)

- reusable components: a toolbox of programming components (clients, samples, source code libraries etc.)

- community: a developer network, involvement in open source development etc.

Soon after social services were mentioned, a heated discussion on reviews and commenting started – I find that the questions raised by user-generated content are less technical than social. Paul stressed that users are less and less interested in metadata and instead want direct access to the content of an information object (book, article, book chapter etc.). The community aspect is still somewhat vague to me; we had some discussion about it too. Service-oriented architecture also implies a different way of software engineering, which can partly be described by the „perpetual beta“ principle. I am very excited about this change and how it will be practised at OCLC|PICA. Luckily I don't have to think about the business model and the legal part, which are not trivial: everyone wants to use services for free, but services need work to get established and maintained, so how do we best distribute the costs among libraries?

That’s all for the introduction, we will get into more concrete services later.

Second day at MTSR

18 October 2007, 18:46

It is already a week ago (conference blogging should be published immediately), so I had better summarize my final notes on the MTSR conference 2007.
