Unique Identifiers for Authors, VIAF and Linked Open Data

20 May 2009, 15:53

The topic of unique identifiers for authors is getting more and more attention on the Web. Martin Fenner listed some research papers about it and did a quick poll – you can see the results in a short presentation [via infobib]. What struck me about the results is how little known the existing traditional identifier systems for authors are: libraries have been managing so-called „authority files“ for years. Since 2005 the German Wikipedia has cooperated with the German National Library to link biographical Wikipedia articles [de] with the German name authority file, and there is a similar project in the Czech Wikipedia.

Maybe the name authority files of libraries are so little known because they have not been visible on the Web – but this is changing. An important project to combine authority files is the Virtual International Authority File (VIAF). It already contains mappings between the name authority files of six national libraries (USA, Germany, France, Sweden, Czech Republic, and Israel), and more are going to be added. At an ELAG 2009 Workshop in Bratislava I talked with VIAF project manager Thomas Hickey (OCLC) about also getting VIAF and its participating authority files into the Semantic Web. He just wrote about recent changes in VIAF: by now it contains almost 8 million records!

So why are people thinking about creating other systems of unique identifiers for authors if an infrastructure already exists? Martin’s survey showed that people want a centralized registry. VIAF is an aggregator of distributed authority files that are managed by national libraries. This architecture has several advantages: for instance, it is non-commercial, and data is managed where it can be managed best (Czech librarians can better identify Czech authors, Israeli librarians can better identify authors from Israel, and so on). One drawback is that libraries are technically slow: many of them have not really switched to the Web and the digital age. For instance, there are still no official URIs for Czech and Israeli authority records, and VIAF is not yet connected to Linked Open Data. But the more people reuse library data instead of reinventing the wheel, the faster and easier it gets.

For demonstration purposes I created a SeeAlso wrapper for VIAF that extracts RDF triples of the mappings. At http://ws.gbv.de/seealso/viafmappings you can try it out by submitting authority record URIs or the authority record codes used at VIAF. For instance, query for LC|n 79003362 in Notation3 to get a mapping for Goethe. Some of the returned URIs are also cool URLs, for instance those at the DNB or the VIAF URI itself. At the moment owl:sameAs is used to specify the mappings; maybe the SKOS vocabulary provides better properties. You can argue a lot about how to encode information about authors, but the unique identifiers – that you can link to – already exist!
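A SeeAlso query is a plain HTTP GET, so a client mainly has to URL-encode the record code. The following Python sketch builds such a request for the wrapper above and serializes mappings as N-Triples, with the predicate switchable between owl:sameAs and skos:exactMatch. The parameter names `id` and `format` (borrowed from unAPI), the format value `n3`, and the example.org URIs are assumptions for illustration, not documented behaviour of the service.

```python
from urllib.parse import urlencode

BASE = "http://ws.gbv.de/seealso/viafmappings"

OWL_SAME_AS = "http://www.w3.org/2002/07/owl#sameAs"
SKOS_EXACT_MATCH = "http://www.w3.org/2004/02/skos/core#exactMatch"


def query_url(record_code: str, fmt: str = "n3") -> str:
    """Build a GET URL for an authority record code such as 'LC|n 79003362'.

    Assumption: the wrapper accepts unAPI-style 'id' and 'format' parameters,
    and 'n3' is a hypothetical format name.
    """
    return BASE + "?" + urlencode({"id": record_code, "format": fmt})


def mapping_triples(subject: str, targets: list[str],
                    predicate: str = OWL_SAME_AS) -> list[str]:
    """Serialize one-to-many identity mappings as N-Triples lines."""
    return [f"<{subject}> <{predicate}> <{target}> ." for target in targets]


if __name__ == "__main__":
    print(query_url("LC|n 79003362"))
    # Hypothetical URIs, only to show the shape of the output.
    for line in mapping_triples("http://example.org/viaf/goethe",
                                ["http://example.org/lc/n79003362"],
                                predicate=SKOS_EXACT_MATCH):
        print(line)
```

Keeping the predicate a parameter makes it cheap to switch from owl:sameAs to a weaker SKOS mapping property later without touching the rest of the code.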

Who identifies the identifiers?

10 May 2009, 16:39

A few days ago, after a short discussion on Twitter, Ross Singer posted a couple of open questions about identifiers for data formats on code4lib and other related mailing lists. He outlined the problem that several APIs like Jangle, unAPI, SRU, OpenURL, and OAI-PMH use different identifiers to specify the format of the data that is transported (MARC-XML, Dublin Core, MODS, BibTeX etc.). It is remarkable that all these APIs are more or less relevant only in the library sector, while the issue of data formats and their identifiers is also relevant in other areas. It looks like the ivory tower of library standards is still being built on.

The problem Ross raised is that there is little coordination, and each standard governs its own registry of data format identifiers. An unofficial registry for unAPI [archive] disappeared (that’s why I started the discussion); there is a registry for SRU, a registry for OpenURL, and a list for Jangle. In OAI-PMH and unAPI each service hosts its own list of formats; OAI-PMH includes a method to map local identifiers to global identifiers.
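To illustrate the OAI-PMH mapping: a ListMetadataFormats response pairs each local `metadataPrefix` with a schema URL and, when present, a `metadataNamespace` that serves as a global identifier. The sketch below extracts that mapping from a trimmed, hand-written sample response; fetching it over HTTP and error handling are left out.

```python
import xml.etree.ElementTree as ET

OAI = "{http://www.openarchives.org/OAI/2.0/}"

# Trimmed sample of a response to ?verb=ListMetadataFormats
# (a real response also carries responseDate and request elements).
SAMPLE = """<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/">
  <ListMetadataFormats>
    <metadataFormat>
      <metadataPrefix>oai_dc</metadataPrefix>
      <schema>http://www.openarchives.org/OAI/2.0/oai_dc.xsd</schema>
      <metadataNamespace>http://www.openarchives.org/OAI/2.0/oai_dc/</metadataNamespace>
    </metadataFormat>
  </ListMetadataFormats>
</OAI-PMH>"""


def prefix_to_namespace(xml_text: str) -> dict[str, str]:
    """Map each repository-local metadataPrefix to its global namespace URI."""
    root = ET.fromstring(xml_text)
    mapping = {}
    for fmt in root.iter(OAI + "metadataFormat"):
        prefix = fmt.findtext(OAI + "metadataPrefix")
        namespace = fmt.findtext(OAI + "metadataNamespace")
        # Skip formats that do not declare a namespace.
        if prefix and namespace:
            mapping[prefix.strip()] = namespace.strip()
    return mapping


print(prefix_to_namespace(SAMPLE))
```

The local prefix `oai_dc` only means something inside one repository; the namespace URI is what a client should compare across repositories.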

On code4lib several arguments and suggestions were raised which almost provoked me into a rant on library standards in general (everyone wants to define but no one likes to implement and reuse. Why do librarians ignore the W3C and IETF?). Identifiers for data formats should not be defined by the creators of transport protocols, nor do we need yet another über-registry. In my view the problem is less technical than social. As Douglas Campbell writes in Identifying the identifiers, one of the rare papers on identifier theory: it’s not a technology issue but a commitment issue.

First, there is a misconception about registries of data format identifiers. You should distinguish descriptive registries, which only list identifiers and formats that are defined elsewhere, from authoritative registries, which define identifiers and formats. Yes: and formats. It makes no sense to define an identifier and say that it stands for data format X if you don’t provide a specification of format X (either via a schema or via a pointer to a schema). This already implies that the best actor to define a format identifier is the creator of the format itself.
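The distinction can be made concrete with a small data structure. In this hypothetical sketch (the class and field names are my own, not taken from any existing registry), every entry must point to a specification, and a registry counts as authoritative only for identifiers that its own maintainer defined; for everything else it is merely descriptive.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FormatEntry:
    """One registry entry: an identifier plus a pointer to the format's specification."""
    identifier: str
    specification: str  # URL of the schema or specification document
    defined_by: str     # who created the format (and hence the identifier)


class FormatRegistry:
    """Toy registry: authoritative for its own entries, descriptive for the rest."""

    def __init__(self, maintainer: str):
        self.maintainer = maintainer
        self.entries: dict[str, FormatEntry] = {}

    def add(self, entry: FormatEntry) -> None:
        # An identifier without a specification cannot be reused sensibly.
        if not entry.specification:
            raise ValueError(f"no specification given for {entry.identifier}")
        self.entries[entry.identifier] = entry

    def is_authoritative_for(self, identifier: str) -> bool:
        entry = self.entries.get(identifier)
        return entry is not None and entry.defined_by == self.maintainer
```

Listing the Atom namespace in such a registry would make it descriptive for that entry, since the format and its identifier were defined elsewhere.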

Second, local identifiers that depend on context are always problematic. There is a well-established global identifier system called Uniform Resource Identifier (URI), and there is no excuse for not using URIs as identifiers except incapability, dullness, laziness, or ignorance. The same reasons apply if you create a new identifier for a data format that already has one. One good thing about URIs is that you can always find out who was responsible for creating a given identifier: you start with the URI scheme and drill down through the namespaces and standards. I must admit that this process can be laborious, but at least it makes registries of identifiers descriptive for all identifiers except the ones in their own namespace.
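The first step of that drill-down can be automated. This minimal sketch, using only Python’s standard library, extracts the part of a URI that names the responsible party: the host for http(s) URIs and the namespace identifier for URNs. Other schemes would need their own rules, which are not covered here.

```python
from urllib.parse import urlsplit


def naming_authority(uri: str) -> tuple[str, str]:
    """Return (scheme, authority) as a first step toward who minted a URI."""
    parts = urlsplit(uri)
    scheme = parts.scheme.lower()
    if scheme in ("http", "https"):
        # Whoever controls the host name controls this part of the namespace.
        return scheme, parts.hostname or ""
    if scheme == "urn":
        # urn:<NID>:<NSS>; the NID points to the registrant of the namespace.
        return scheme, parts.path.split(":", 1)[0].lower()
    # Other schemes (info:, tag:, ...) need their own rules.
    return scheme, ""


print(naming_authority("http://www.w3.org/2005/Atom"))
print(naming_authority("urn:isbn:0451450523"))
```

From there you continue by hand: the W3C for www.w3.org, the ISBN agency for urn:isbn, and so on.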

Third, you must be clear about the definition of a format. For instance, the local identifier „MARC“ does not refer to one format but to many variants (USMARC, UNIMARC, MARC21…) and encodings (MARCXML/MARC21). This is not unusual if you consider that many formats are specializations of other formats. For instance, ATOM (defined by RFC4287 and RFC5023, identified either by its MIME type „application/atom+xml“, which could be expressed as the URI http://www.iana.org/assignments/media-types/application/atom%2Bxml, or by its XML namespace „http://www.w3.org/2005/Atom“)* is an extension of XML (specified in http://www.w3.org/TR/xml [XML 1.0] and http://www.w3.org/TR/xml11 [XML 1.1], identified by these URLs or by the MIME type „application/xml“, which is the URI http://www.iana.org/assignments/media-types/application/xml)*.
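Deriving the IANA-style URI from a MIME type is mechanical: drop parameters, then percent-encode the subtype so that “+” becomes “%2B”. The sketch below assumes exactly the URI pattern quoted in this paragraph and reproduces its two examples.

```python
from urllib.parse import quote

IANA_MEDIA_TYPES = "http://www.iana.org/assignments/media-types/"


def media_type_uri(mime_type: str) -> str:
    """Turn 'type/subtype' into the IANA assignments URI form.

    Parameters such as '; charset=utf-8' are dropped, and both parts are
    percent-encoded so that '+' becomes '%2B'.
    """
    base = mime_type.split(";", 1)[0].strip().lower()
    top, sub = base.split("/", 1)
    return IANA_MEDIA_TYPES + quote(top, safe="") + "/" + quote(sub, safe="")


print(media_type_uri("application/atom+xml"))
print(media_type_uri("application/xml"))
```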

The problem of identifying the right identifiers for data formats can be reduced to two fundamental rules of thumb:

1. reuse: don’t create new identifiers for things that already have one.

2. document: if you have to create an identifier, describe its referent as openly, clearly, and in as much detail as possible to make it reusable.

If multiple identifiers happen to exist for one thing, choose the one that is best documented and most widely adopted. There will always be multiple identifiers for the same thing – don’t make it worse.
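The two rules plus the tie-breaker translate into a few lines of Python. This is only a sketch: `Candidate`, its `documented` flag, and the numeric `adoption` score are hypothetical stand-ins for what in practice is a judgment call, and the example values are made up.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    uri: str
    documented: bool  # is there an open specification behind it?
    adoption: int     # hypothetical score, e.g. number of services using it


def choose_identifier(candidates: list[Candidate]) -> Candidate:
    """Reuse an existing identifier: prefer documented ones, then the most adopted."""
    if not candidates:
        # Rule 2 applies only now: mint a new identifier and document it.
        raise LookupError("no existing identifier; create one and document it")
    # Tuples compare element-wise, and True sorts above False.
    return max(candidates, key=lambda c: (c.documented, c.adoption))


atom_ns = Candidate("http://www.w3.org/2005/Atom", documented=True, adoption=10)
local = Candidate("info:example/atom", documented=False, adoption=50)
print(choose_identifier([local, atom_ns]).uri)
```

Documentation deliberately outranks adoption here: a widely used but undocumented local label is exactly what the rules above try to avoid.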

*Footnote: The identification of Internet Media Types with URIs that start with http://www.iana.org/assignments/media-types/ is neither widely used nor well documented, but it’s the most official URI form that I could find. If there is a better identifier for a particular format, like an XML or RDF namespace, then you should use that; but if there is nothing but a MIME type, then there is no reason to create a new URI on your own.

Wikimania 2009: Call for Participation

2 March 2009, 22:36

Last week the Wikimania 2009 team announced its Call for Participation for the annual international conference of the Wikimedia Foundation. Wikimania 2009 will take place August 25-28, 2009 in Buenos Aires, Argentina, at the Centro Cultural General San Martín.

The deadline for workshop, panel, and presentation submissions is April 15th; posters, open space discussions, and artistic works can be submitted until April 30th. There are two tracks: a Casual Track, for members of wiki communities and interested observers to share their own experiences and thoughts and to present new ideas; and an Academic Track, for research based on the methods of scientific studies exploring the social, content, or technical aspects of Wikipedia, the other Wikimedia projects, or other massively collaborative works, as well as open and free content creation and community dynamics more generally.

Wikimania 2010 will take place in Europe again, in Amsterdam, Gdańsk, or Oxford (unless another city pops up by the end of this week).

European kind-of-Code4Lib conference

12 February 2009, 16:01

On April 22-24th the European Library Automation Group Conference (ELAG 2009) will take place at the University Library in Bratislava. This is the 33rd ELAG and will be my first. I am happy to see that digital library conferences and developer meetups don’t only take place in the US; hopefully ELAG is like the Code4Lib Conference, which takes place on February 23-26th this year for the 4th time. Maybe we could have a joint Code4Lib Europe / ECDL sometime in the next years? But first: „meet us at ELAG 2009“!

Other library-related events in Germany that I will participate in over the next months include the 10th Sun Summit Bibliotheken (March 18/19th in Kassel), ISI 2009 (April 1-3rd in Konstanz), BibCamp 2009 (May 15-17th in Stuttgart), and the annual Bibliothekartag (June 2-5th in Erfurt). I am not sure yet about the European Conference on Digital Libraries (ECDL 2009, September 27th – October 2nd in Corfu, Greece); the Call for Contributions is open until March 21st.

Ariadne article about SeeAlso linkserver protocol

13 November 2008, 11:32

The current issue of Ariadne, which has just been published, contains an article about the „SeeAlso“ linkserver protocol: Jakob Voß: „SeeAlso: A Simple Linkserver Protocol“, Ariadne Issue 57, 2008.

SeeAlso combines OpenSearch and unAPI into a simple API that delivers lists of links. You can use it to dynamically embed links to recommendations, reviews, current availability, search completion suggestions, etc. It’s no rocket science, but I find a well-defined API with a reusable server and client better than having to hack a special format and lookup syntax for each single purpose.
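The simple SeeAlso response builds on the OpenSearch Suggestions JSON layout: an array with the queried identifier followed by parallel lists of labels, descriptions, and link URIs, optionally wrapped in a JavaScript callback. Treat the exact layout in this sketch as an assumption to check against the specification. The Python code below (not the Perl reference server) only shows how small such a response is to produce and to consume; the example links are hypothetical.

```python
import json
import re


def seealso_response(identifier, links, callback=None):
    """Serialize links as [identifier, [labels], [descriptions], [uris]].

    `links` is a list of (label, description, uri) tuples. If `callback`
    is given, the JSON is wrapped in a JavaScript function call (JSONP).
    """
    labels = [label for label, _, _ in links]
    descriptions = [desc for _, desc, _ in links]
    uris = [uri for _, _, uri in links]
    body = json.dumps([identifier, labels, descriptions, uris])
    if callback:
        # Only accept plain JavaScript identifiers as callback names.
        if not re.fullmatch(r"[A-Za-z_$][\w$.]*", callback):
            raise ValueError("invalid callback name")
        return f"{callback}({body});"
    return body


def parse_seealso(body):
    """Turn an unwrapped response back into (identifier, list of link tuples)."""
    identifier, labels, descriptions, uris = json.loads(body)
    return identifier, list(zip(labels, descriptions, uris))


print(seealso_response("0-123", [("Review", "A review", "http://example.org/r/1")]))
```

Because the three lists are parallel, a client can render each link from index i of every list without any further schema.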

The reference client is written in JavaScript and the reference server in Perl. Implementing it in any other language should not be complicated. I’d be happy to get some feedback, in the form of code, applications, or criticism. 🙂 I noticed that SeeAlso::Server is the only implementation of unAPI on CPAN so far – if someone is interested, we could extract parts into an independent unAPI package. The WWW::OpenSearch::Description package is also worth considering for use in SeeAlso::Server.

Date of Wikimania 2009

21 October 2008, 00:18

As already announced two weeks ago (but not blogged before, only on identi.ca), Wikimania 2009 will take place August 25-28, 2009 in Buenos Aires, Argentina, at the Centro Cultural General San Martín.

Quick overview of Open Access LIS journals

14 October 2008, 21:12

I just stumbled upon the article „Evaluating E-Contents Beyond Impact Factor – A Pilot Study Selected Open Access Journals In Library And Information Science“ by Bhaskar Mukherjee (Journal of Electronic Publishing, vol. 10, no. 2, Spring 2007). It contains a detailed analysis of five Open Access journals in Library and Information Science, namely Ariadne, D-Lib Magazine, First Monday, Information Research, and Information Technology and Disabilities.

A more comprehensive list of OA journals in the LIS field can be found in the Directory of Open Access Journals (DOAJ). It currently lists 89 journals. Not all of them are highly relevant and lively, so how do you compare them? The traditional journal impact factor is obviously rubbish, and most journals are not covered anyway. In a perfect world you could easily harvest all articles via OAI-PMH, extract all references via their identifiers, and create a citation network of the Open Access world of library science; maybe you should also include some repositories like E-LIS. But maybe you can measure impact in other ways. Why not include blogs? Instead of laboriously writing a full research paper for JASIST to „evaluate the suitability of weblogs for determining the impact of journals“ (ad-hoc title), I quickly used Google Blogsearch to count links from weblog entries to some journal pages:

- First Monday: 4,791
- D-Lib Magazine: 2,366
- Ariadne: 1,579
- Information Research: 372
- Issues in Science & Technology Librarianship: 249
- Code4Lib Journal: 209
- Libres: 61
- Journal of Library and Information Technology: 10

I freely admit that my method is insufficient: you would first have to evaluate Google Blogsearch, check URL patterns, divide the number by age or by number of articles, etc. Fortunately my blog is not peer-reviewed. But you can comment!
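The last step of the citation-network idea mentioned above, turning extracted references into journal-to-journal counts, fits into a few lines. The sketch assumes that harvesting via OAI-PMH and reference extraction have already produced two plain dictionaries; the article identifiers used with it are hypothetical.

```python
from collections import Counter


def journal_citation_network(references, journal_of):
    """Count journal-to-journal citations.

    references: dict mapping an article identifier to the identifiers it cites
    journal_of: dict mapping an article identifier to its journal
    Citations to articles outside the harvested set are ignored.
    """
    edges = Counter()
    for citing, cited_ids in references.items():
        source = journal_of.get(citing)
        if source is None:
            continue
        for cited in cited_ids:
            target = journal_of.get(cited)
            if target is not None:
                edges[(source, target)] += 1
    return edges


refs = {"a1": ["b1", "b2", "x9"], "b1": ["a1"]}
journals = {"a1": "Ariadne", "b1": "D-Lib Magazine", "b2": "D-Lib Magazine"}
print(journal_citation_network(refs, journals))
```

The weighted edges could then feed any standard network measure instead of a single impact number.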

A note on German OA LIS journals: it’s a shame that although German librarians mostly read German library journals, only two of these are truly Open Access. Besides the more specialized GMS Medizin-Bibliothek-Information there is LIBREAS (blog count: 71). Its current CfP is for a special issue about Open Access and the humanities!

P.S: Why do so many LIS journals use insane and ugly URLs instead of clean and stable ones like http://JOURNALNAME.TLD/ISSUE/ARTICLE? This is like printing a journal on toilet paper! Apache rewrite rules, virtual hosts, and reverse proxies exist for a good reason.
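What such a rewrite achieves can be sketched independently of Apache: map the clean public path /ISSUE/ARTICLE onto whatever internal URL the journal software actually uses. The internal pattern `/index.php?issue={issue}&article={article}` is hypothetical, and in production the mapping belongs in the web server configuration rather than in application code.

```python
import re

# Clean public form: /<issue>/<article>, optionally with a trailing slash.
CLEAN_PATH = re.compile(r"^/(?P<issue>[\w-]+)/(?P<article>[\w-]+)/?$")

# Hypothetical internal URL of some journal software.
INTERNAL_TEMPLATE = "/index.php?issue={issue}&article={article}"


def rewrite(path):
    """Return the internal URL for a clean path, or None if it does not match."""
    match = CLEAN_PATH.match(path)
    if match is None:
        return None
    return INTERNAL_TEMPLATE.format(**match.groupdict())


print(rewrite("/57/voss"))
```

The public URL stays stable even if the software behind it, and thus the internal template, changes.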

P.P.S: The IFLA had a Section on Library and Information Science Journals 2002-2005.

Digital libraries sleep away the web 2.0

1 October 2008, 23:58

From time to time I still publish on paper, so I have to deposit the publication in a repository to make it (and its metadata) available; mostly I use the „open archive for Library and Information Science“ named E-LIS. But each time I get angry because uploading and describing a submission is so complicated, especially compared to popular commercial repositories like Flickr, SlideShare, YouTube, and such. These web applications pay a lot of attention to usability, which sadly is of low priority in many digital libraries.

I soon realized that E-LIS uses a very old version (2.13.1) of GNU EPrints. EPrints 3 has been available since December 2006, and there have been many updates since then. To find out whether it is usual to run a repository with such outdated software, I did a quick study. The Registry of Open Access Repositories (ROAR) should list all relevant public repositories that run EPrints. With 30 lines of Perl I fetched the list (271 repositories) and queried each repository via OAI to find out the version number. Here is a short summary of the results:

76 x unknown (script failed to get or parse OAI response), 8 x 2.1, 18 x 2.2, 98 x 2.3, 58 x 3.0, 13 x 3.1

Of the 195 repositories that I could successfully query and determine the version number of, only 13 use the newest version 3.1 (released September 8th). Moreover, 124 still use version 2.3 or older. EPrints 2.3 was released before the Web 2.0 hype in 2005! One true point of all this Web 2.0 blah is the concept of „perpetual beta“: release early and often and follow user feedback, so your application will quickly improve. But most repository operators do not seem to have a real interest in improvement or in their users!
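The figures in the previous paragraph follow directly from the version counts listed above; this short Python check recomputes them. It is just arithmetic on the survey counts, not the original Perl script.

```python
# Version counts from the survey (271 EPrints repositories listed in ROAR).
counts = {"unknown": 76, "2.1": 8, "2.2": 18, "2.3": 98, "3.0": 58, "3.1": 13}


def summarize(counts):
    """Return (total, known, newest, at_most_2_3) for a version histogram."""
    total = sum(counts.values())
    known = total - counts.get("unknown", 0)
    newest = counts.get("3.1", 0)
    # Compare versions numerically, e.g. (2, 3) <= (2, 3) but (3, 0) > (2, 3).
    old = sum(n for version, n in counts.items()
              if version != "unknown"
              and tuple(map(int, version.split("."))) <= (2, 3))
    return total, known, newest, old


total, known, newest, old = summarize(counts)
print(f"{total} listed, {known} identified, {newest} on 3.1, {old} on 2.3 or older")
```

So roughly 64% of the identified repositories (124 of 195) were still running version 2.3 or older.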

Ok, I know that managing and updating a repository server is work (I would not be the right guy for such a job), but then don’t wail about low acceptance or wonder why libraries have an antiquated image. For real progress you should continually run user studies and engage in the development of your software. Digital libraries with fewer resources should at least join the community and follow updates to keep up to date.

P.S: E-LIS has updated its software now (November 2008). A lot of missing features remain but those need to be implemented in EPrints first.

Dublin Core conference 2008 started

23 September 2008, 12:20

Yesterday the Dublin Core Conference 2008 (DC 2008) started in Berlin. I spent the first day with several Dublin Core tutorials and with running after my bag, which I had forgotten on the train. Luckily the train ended in Berlin, so I only had to get to the other part of the town to recover it! For the rest of the day I attended the DC tutorials by Pete Johnston and Marcia Zeng (slides are online as PDF). The tutorials were correct but got somewhat lost between theory and practice (see Paul’s comment). I cannot go into details, but there must be a better way to explain and summarize Dublin Core briefly. The problem may lie in the fuzzy definition of Dublin Core. For my taste there are far too many „cans“, „shoulds“, and „mays“ instead of formal „musts“. I would also put more stress on the importance of publishing stable URIs for everything and of using syntax encoding schemes.

What really annoys me about DC is the low commitment of the Dublin Core community to RDF. RDF is not promoted as the basis but only as one possible way to encode Dublin Core. In the same way you could have argued in the early 1990s that HTTP/HTML is just one framework to build on. That’s right, and of course RDF is not the final answer to metadata issues, but it’s the state of the art for encoding structured data on the web. I wonder when the Dublin Core community lost its tight connection with the W3C/RDF community (which in turn was spoiled by the XML community). In official talks you don’t hear these hidden stories of antipathies and self-interest in standardization.
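To show how little is needed to publish Dublin Core as RDF, here is a minimal Python sketch that writes a record as N-Triples using the DCMI terms namespace (http://purl.org/dc/terms/). A date is attached as a typed literal with the syntax encoding scheme dcterms:W3CDTF. The resource URI in the usage line is hypothetical, and a real application would rather use an RDF library.

```python
DCTERMS = "http://purl.org/dc/terms/"


def literal(value, datatype=None):
    """Serialize a string as an N-Triples literal, escaping backslashes, quotes and line breaks."""
    escaped = (value.replace("\\", "\\\\").replace('"', '\\"')
               .replace("\n", "\\n").replace("\r", "\\r"))
    return f'"{escaped}"' + (f"^^<{datatype}>" if datatype else "")


def dc_triples(resource, record):
    """Turn {term: value} pairs into N-Triples lines using DCMI term URIs.

    A value may be a plain string or a (string, datatype URI) pair, which is
    how a syntax encoding scheme such as dcterms:W3CDTF can be attached.
    """
    lines = []
    for term, value in record.items():
        lexical, datatype = value if isinstance(value, tuple) else (value, None)
        lines.append(f"<{resource}> <{DCTERMS}{term}> {literal(lexical, datatype)} .")
    return lines


for line in dc_triples("http://example.org/doc/1",
                       {"title": "Dublin Core 2008",
                        "date": ("2008-09-22", DCTERMS + "W3CDTF")}):
    print(line)
```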

The first keynote that I heard on day 2 was given by Jennifer Trant about the results of steve.museum, one of the best projects analyzing tagging in real-world environments. Data, software, and publications are available to build upon. The second talk, „Encoding Application Profiles in a Computational Model of the Crosswalk“ by Carol Jean Godby (PDF slides), was interesting as well. In our library service center we deal a lot with translations (aka mappings, crosswalks etc.) between metadata formats, so the crosswalk web service by OCLC and its description language may be very useful, if it is properly documented and supported. After this talk Maria Elisabete Catarino reported in „Relating Folksonomies with Dublin Core“ (PDF slides) on a study of the purposes and usage of social tagging and whether/how tags could be encoded with DC terms.
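Independent of OCLC’s service and its description language, which I do not reproduce here, the core of a crosswalk is a table-driven mapping. In this Python sketch both the simplified record structure and the four MARC-to-DC assignments are illustrative assumptions, not an authoritative crosswalk.

```python
# Illustrative (MARC tag, subfield) -> Dublin Core element table.
MARC_TO_DC = {
    ("245", "a"): "title",
    ("100", "a"): "creator",
    ("260", "c"): "date",
    ("650", "a"): "subject",
}


def crosswalk(fields, table=MARC_TO_DC):
    """Map simplified MARC fields to Dublin Core elements.

    fields: list of (tag, {subfield code: value}) pairs; repeated fields
    (such as several 650 subjects) yield repeated DC values.
    """
    record = {}
    for tag, subfields in fields:
        for code, value in subfields.items():
            element = table.get((tag, code))
            if element is not None:
                # Strip ISBD-style trailing punctuation common in MARC data.
                record.setdefault(element, []).append(value.rstrip(" /:;,."))
    return record


marc = [("245", {"a": "Faust /", "c": "Goethe."}),
        ("100", {"a": "Goethe, Johann Wolfgang von,"}),
        ("650", {"a": "Drama"}), ("650", {"a": "Legends"})]
print(crosswalk(marc))
```

Keeping the mapping in data rather than code is what makes a crosswalk describable, and hence shareable, in the first place.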

On Friday we will hold a first Seminar on User Generated Metadata with OpenStreetMap, Wikipedia, BibSonomy, and The Open Library. Looking forward to it!

P.S: Pete Johnston’s slides on DC basic concepts are now also available at SlideShare [via his blog]

Insight into the Digital Library Reference Model

18 September 2008, 18:31

On the first day after the ECDL conference I participated in the Third Workshop on Foundations of Digital Libraries (DLFoundations 2008), which was organized by the DELOS Network of Excellence on Digital Libraries. One major output of DELOS is the DELOS Digital Library Reference Model (DLRM). The DLRM is an abstract model to describe digital libraries; it can be compared to the CIDOC Conceptual Reference Model (CIDOC-CRM) and the Functional Requirements for Bibliographic Records (FRBR), and it shares some of their problems: a lack of availability and, as a result, a lack of implementations.

The DLRM is „defined“ in a 213-page PDF file – this is just not usable! Have a look at how the W3C or IETF define standards nowadays. As DLRM is a conceptual model, you must also provide an RDF representation, or it is simply unavailable for serious applications on the web. And of course the silly copyright statement should be removed in favor of a CC license. That’s the formal part. As for the content, DLRM has 218 concepts and 52 relations, which is far too much to start with. But there are some really useful ideas behind DLRM.

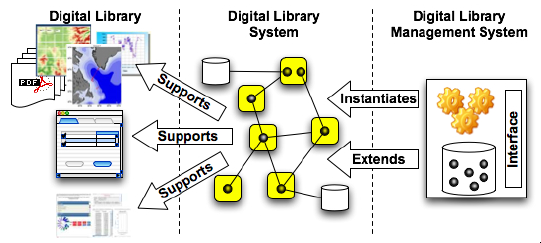

The reference model divides „digital library“ into three levels of conceptualization (see image): first the visible digital library (the collection that users work with), second the digital library system (the software application that is installed and configured to run a digital library), and third the digital library management system (the software system that supports the production and administration of digital libraries). This division can be helpful for understanding and talking about digital libraries, although I am not sure whether the distinction between digital library systems and digital library management systems is such a good one.
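As an example of the RDF representation demanded above, the three levels could be declared as RDFS classes. The namespace http://example.org/dlrm# and the class names are hypothetical; as far as I know the DLRM does not define URIs of its own.

```python
RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
RDFS = "http://www.w3.org/2000/01/rdf-schema#"
# Hypothetical namespace for DLRM concepts.
DLRM = "http://example.org/dlrm#"

LEVELS = {
    "DigitalLibrary": "the collection that users work with",
    "DigitalLibrarySystem": "the installed and configured software that runs a digital library",
    "DigitalLibraryManagementSystem": "the software that supports producing and administering digital libraries",
}


def levels_as_ntriples():
    """Declare each conceptual level as an rdfs:Class with an rdfs:comment."""
    lines = []
    for name, comment in LEVELS.items():
        lines.append(f"<{DLRM}{name}> <{RDF}type> <{RDFS}Class> .")
        lines.append(f'<{DLRM}{name}> <{RDFS}comment> "{comment}" .')
    return lines


print("\n".join(levels_as_ntriples()))
```

Once concepts have URIs like these, other vocabularies can link to them, which a PDF cannot offer.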

Besides general talks about the Digital Library Reference Model, the workshop offered some practical experience from Wolfram Horstmann (DRIVER project) and Georg Eckes (Deutsches Filminstitut) – never underestimate good real-world examples! The most refreshing talk was given by Joan Lippincott (Coalition for Networked Information). She pointed out that much more than traditional repositories can be viewed as digital libraries. In particular, user-generated content can constitute a digital library. A useful model for digital libraries should also fit collections at Flickr, YouTube, wikis, weblogs etc., and users can mash up resources to create new digital library services, for instance the species search engine iSpecies. She is sooo right! In addition, Joan mentioned initiatives to broaden the use of authority files and identity management. Another direct hit! If digital libraries only focus on interoperability with other „official“ digital libraries, they will not survive. Libraries are only one little player in the digital knowledge environment, and its infrastructure is not defined by them alone.

I enjoyed the workshop, I really like the digital library community, and I am happy to be part of it. But some parts still seem to live in an ivory tower. If the digital library reference model is not quickly applied to real applications (both repositories like those built with DSpace, Fedora, EPrints etc. and open systems like YouTube, Wikipedia, SlideShare…), it is nothing but an interesting idea. The digital revolution is taking place anyway, so let’s be part of it!

P.S: The slides will soon be available at the Workshop’s website.
