On the way to a library ontology

11 April 2013, 15:02 (2 comments)

I have been working for some years on the specification and implementation of several APIs and exchange formats for data used in and provided by libraries. Unfortunately, most existing library standards are either fuzzy, complex, and misused (such as MARC21), or limited to bibliographic data or authority data, or both. Libraries, however, are much more than bibliographic data: they involve library patrons, library buildings, library services, library holdings, library databases, etc.

During the work on formats and APIs for these parts of the library world, with the Patrons Account Information API (PAIA) being the newest piece, I found myself more and more on the way to a whole library ontology. The idea of a library ontology started in 2009 (now moved to this location), but designing such a broad data model from the bottom up would surely have led to yet another complex, impractical, and unused library standard. Meanwhile, there are several smaller ontologies for parts of the library world, which can be combined and used as Linked Open Data.

In my opinion, ontologies, RDF, the Semantic Web, Linked Data and all the buzz are overrated, but they offer some opportunities for clean data modeling and data integration, which one rarely finds in library data. For this reason I try to design all APIs and formats to be at least compatible with RDF. For instance, the Document Availability Information API (DAIA), created in 2008 (and now being slightly redesigned for version 1.0), can be accessed in XML and in JSON format, and both can be fully mapped to RDF. Other micro-ontologies include:

- Document Service Ontology (DSO) defines typical document-related services such as loan, presentation, and digitization

- Simple Service Status Ontology (SSSO) defines a service instance as a kind of event that connects a service provider (e.g. a library) with a service consumer (e.g. a library patron). SSSO further defines typical service statuses (e.g. reserved, prepared, executed…) and limitations of a service (e.g. a waiting queue or a delay).

- Patrons Account Information API (PAIA) will include a mapping to RDF to express basic patron information, fees, and a list of current services in a patron account, based on SSSO and DSO.

- Document Availability Information API (DAIA) includes a mapping to RDF to express the current availability of library holdings for selected services. See here for the current draft.

- A holdings ontology should define properties to relate holdings (or parts of holdings) to abstract documents and editions and to holding institutions.

- GBV Ontology contains several concepts and relations used in the GBV library network that do not fit into other ontologies (yet).

- One might further create a database ontology to describe library databases with their provider, extent, APIs, etc. Right now we use the GBV ontology for this purpose. Is there anything to reuse instead of creating just another ontology?!
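The SSSO idea of a service instance as an event with a status can be illustrated with a small state machine. This is a minimal sketch under stated assumptions: the statuses reserved, prepared, and executed come from the examples above, while `PROVIDED`, `REJECTED`, the `queue` field, and the transition table are hypothetical additions for illustration, not taken from the SSSO specification.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    # RESERVED, PREPARED, EXECUTED follow the examples in the text;
    # PROVIDED and REJECTED are assumptions added for this sketch.
    RESERVED = "reserved"
    PREPARED = "prepared"
    PROVIDED = "provided"
    EXECUTED = "executed"
    REJECTED = "rejected"


# Hypothetical transition table: a service event moves forward one step
# at a time and can be rejected until it has been executed.
TRANSITIONS = {
    Status.RESERVED: {Status.PREPARED, Status.REJECTED},
    Status.PREPARED: {Status.PROVIDED, Status.REJECTED},
    Status.PROVIDED: {Status.EXECUTED, Status.REJECTED},
    Status.EXECUTED: set(),
    Status.REJECTED: set(),
}


@dataclass
class ServiceEvent:
    """A service instance connecting a provider (library) with a consumer (patron)."""
    service: str                      # e.g. "loan", a DSO-style service type
    provider: str                     # e.g. a library ISIL such as "DE-23"
    consumer: str                     # hypothetical patron identifier
    status: Status = Status.RESERVED
    queue: int = 0                    # assumed limitation: patrons waiting ahead
    history: list = field(default_factory=list)

    def advance(self, new_status: Status) -> None:
        """Move to a new status, refusing transitions not in the table."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot go from {self.status.value} to {new_status.value}")
        self.history.append(self.status)
        self.status = new_status


if __name__ == "__main__":
    loan = ServiceEvent("loan", "DE-23", "patron-42", queue=2)
    loan.advance(Status.PREPARED)
    print(loan.status.value)  # prepared
```

Keeping the allowed transitions in a table rather than in `if` branches makes it easy to adjust the sketch if the real ontology defines a different lifecycle.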

The next step will probably be the creation of a small holdings ontology that fits nicely with the other micro-ontologies. This ontology should be aligned or compatible with the BIBFRAME initiative, other ontologies such as Schema.org, and existing holding formats, without becoming too complex. The German initiative DINI-KIM has just launched a working group to define such a holdings format or ontology.

Linked local library data simplified

10 January 2012, 14:53 (1 comment)

A few days ago, Lukas Koster wrote an article about local library linked data. He argues that bibliographic data published by libraries as linked data is not „the most interesting type of data that libraries can provide“. Instead, „library data that is really unique and interesting is administrative information about holdings and circulation“. So libraries „should focus on holdings and circulation data, and for the rest link to available bibliographic metadata as much as possible.“ I fully agree with these statements, but not with his exact method of publishing local library data.

Among other projects, Koster points to LibraryCloud to aggregate and deliver library metadata, but it looks like they reinvent yet more wheels in the form of their own APIs and formats for search and for bibliographic description. Maybe I am wrong about this project, as they have only just started to collect holding and circulation data.

At the recent Semantic Web in Bibliotheken conference, Magnus Pfeffer gave a presentation about „Publishing and consuming library loan information as linked open data“ (see slides), and I talked about a Simplified Ontology for Bibliographic Resources (SOBR), which is mainly based on the DAIA data model. We are going to align both data models, and I hope that other libraries will look at these existing solutions first instead of inventing yet another data format or ontology. Koster’s proposal, however, looks like just such another solution: he argues that „we need an extra explicit level to link physical Items owned by the library or online subscriptions of the library to the appropriate shared network level“ and suggests introducing a „holding“ level. So there would be five levels of description:

- Work

- Expression

- Manifestation

- Holding

- Item

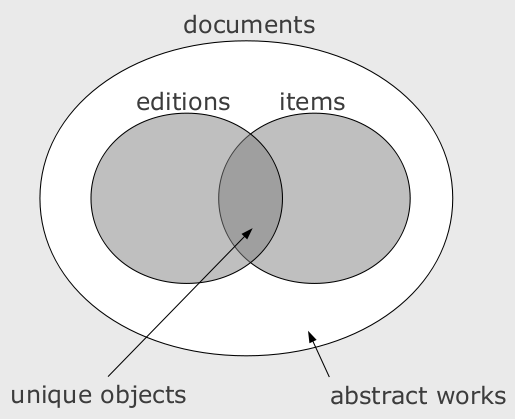

Apart from the fact that at least one of Work, Expression, and Manifestation is dispensable, I disagree with a Holding level above the Item. My current model consists of at most three levels of documents:

- document as abstract work (frbr:Work, schema:CreativeWork…)

- bibliographic document (frbr:Manifestation, sobr:Edition…)

- item as concrete single copy (frbr:Item…)

The term „level“ is misleading because these classes are not disjoint. I depicted their relationship in a simple Venn diagram:

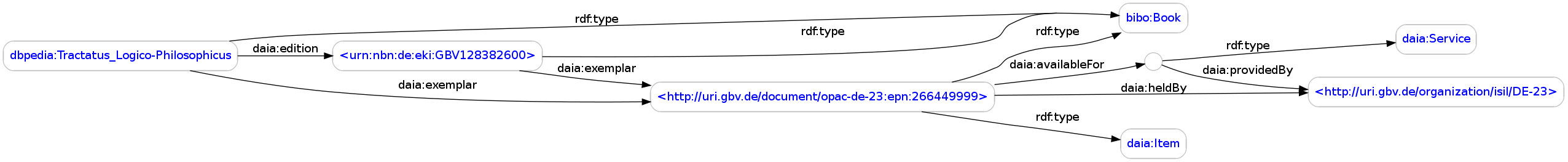
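The difference between exclusive "levels" and overlapping classes can be made concrete in a few lines. In this minimal sketch each resource carries a set of classes instead of a single level; the identifiers are shortened from the Tractatus example in this post, and the unique manuscript is a hypothetical case of one resource being both an edition and its only copy.

```python
# Each resource may belong to several classes at once, unlike a single "level".
# Identifiers are shortened for readability; "manuscript-1" is hypothetical.
resource_types = {
    "tractatus": {"Document", "Work"},
    "GBV128382600": {"Document", "Edition"},
    "epn:266449999": {"Document", "Item"},
    "manuscript-1": {"Document", "Edition", "Item"},  # a unique copy
}


def members(cls: str) -> set[str]:
    """All resources that are instances of the given class."""
    return {r for r, classes in resource_types.items() if cls in classes}


def overlap(a: str, b: str) -> set[str]:
    """Resources in both classes; a non-empty result means they are not disjoint."""
    return members(a) & members(b)


if __name__ == "__main__":
    print(sorted(overlap("Edition", "Item")))  # ['manuscript-1']
```

With a single-valued "level" attribute the manuscript would have to be forced into either Edition or Item; with class membership both statements can hold at once.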

For local library data, we are interested in single items, which are copies of general documents or editions. Where do Koster’s „holding“ entities fit into this model? He writes „a specific Holding in this way would indicate that a specific library has one or more copies (Items) of a specific edition of a work (Manifestation), or offers access to an online digital article by way of a subscription.“ The core concepts as I read them are:

- „one or more copies (Items)“ = frbr:Item

- „specific edition of a work (Manifestation)“ = sobr:Edition or frbr:Manifestation

- „has one […] or offer access to“ = ???

Instead of creating another entity for holdings, you can express the ability „to have one or offer access to“ with DAIA services. The class daia:Service can be used for an unspecified service, and more specific subclasses such as loan, presentation, and openaccess can be used if more is known. Here is a real example with all „levels“:

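```turtle
# Namespace prefixes, added so that the snippet parses as Turtle
@prefix bibo: <http://purl.org/ontology/bibo/> .
@prefix daia: <http://purl.org/ontology/daia/> .

# The abstract work
<http://dbpedia.org/resource/Tractatus_Logico-Philosophicus>
    a bibo:Book ;
    daia:edition <urn:nbn:de:eki:GBV128382600> ;
    daia:exemplar <http://uri.gbv.de/document/opac-de-23:epn:266449999> .

# The edition
<urn:nbn:de:eki:GBV128382600> a bibo:Book ;
    daia:exemplar <http://uri.gbv.de/document/opac-de-23:epn:266449999> .

# The single copy, held by DE-23 and available for an unspecified service
<http://uri.gbv.de/document/opac-de-23:epn:266449999>
    a bibo:Book, daia:Item ;
    daia:heldBy <http://uri.gbv.de/organization/isil/DE-23> ;
    daia:availableFor [
        a daia:Service ;
        daia:providedBy <http://uri.gbv.de/organization/isil/DE-23>
    ] .
```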

The only RDF property I made up is daia:edition, taken from the SOBR proposal, because FRBR relations are too strict. If you know a better relation to directly relate an abstract work to a concrete edition, please let me know.
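To show that the example needs no separate holding entity, here is a minimal sketch that stores the triples above as plain Python tuples (no RDF library, to keep it self-contained; `"a"` stands for rdf:type and `"_:s1"` for the blank service node) and answers „which institution offers which service for this document?“ by following daia:exemplar and daia:availableFor. The helper names `objects` and `offers` are hypothetical.

```python
WORK = "http://dbpedia.org/resource/Tractatus_Logico-Philosophicus"
EDITION = "urn:nbn:de:eki:GBV128382600"
ITEM = "http://uri.gbv.de/document/opac-de-23:epn:266449999"
DE23 = "http://uri.gbv.de/organization/isil/DE-23"

# The Turtle example as (subject, predicate, object) tuples.
TRIPLES = [
    (WORK, "a", "bibo:Book"),
    (WORK, "daia:edition", EDITION),
    (WORK, "daia:exemplar", ITEM),
    (EDITION, "a", "bibo:Book"),
    (EDITION, "daia:exemplar", ITEM),
    (ITEM, "a", "bibo:Book"),
    (ITEM, "a", "daia:Item"),
    (ITEM, "daia:heldBy", DE23),
    (ITEM, "daia:availableFor", "_:s1"),
    ("_:s1", "a", "daia:Service"),
    ("_:s1", "daia:providedBy", DE23),
]


def objects(subject, predicate):
    """All objects of triples with the given subject and predicate."""
    return [o for s, p, o in TRIPLES if s == subject and p == predicate]


def offers(document):
    """(provider, service class) pairs for all exemplars of a document.

    This answers the question a 'holding' entity would answer, using only
    items and services.
    """
    result = set()
    for item in objects(document, "daia:exemplar"):
        for service in objects(item, "daia:availableFor"):
            for provider in objects(service, "daia:providedBy"):
                for service_class in objects(service, "a"):
                    result.add((provider, service_class))
    return result


if __name__ == "__main__":
    print(offers(WORK))
```

Both the work and the edition reach the same item, so the same offer shows up on either „level“.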

image created with rdfdot

How to encode the availability of documents

23 October 2009, 12:50 (2 comments)

For almost a year I have been working on a simple encoding format and API to get just the current (!) availability status of documents in libraries. Together with Reh Uwe (hebis network) and Anne Christensen (beluga project), we created the Document Availability Information API (DAIA), which is defined as a data model with encodings in XML and JSON (whichever you prefer).

This week I finished and published a reference implementation of the DAIA protocol as an open-source Perl module on CPAN. The implementation includes a simple DAIA validator and converter. A public installation of this validator is also available. The next tasks include implementing server and client components for several ILS products. Every library has its own special rules and schemas; Jonathan Rochkind has already written about the difficulty of implementing DAIA given the complexity of ILSs. We cannot erase this complexity by magic (unless we refactor and clean up the ILS), but at least we can try to map it to a common data model, which is what DAIA provides.
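Mapping ILS-specific rules to the common model usually comes down to a lookup table per system. The following minimal sketch assumes a hypothetical ILS with three status codes and produces a dictionary loosely modeled on DAIA’s JSON encoding (document, item, available, unavailable, service); the exact field names should be checked against the DAIA specification, and the status codes and `to_daia` function are illustrative only.

```python
import json

# Hypothetical status codes of some ILS; every real system has its own.
ILS_STATUS = {
    "AVAIL": {"available": ["loan", "presentation"]},
    "REF": {"available": ["presentation"], "unavailable": ["loan"]},
    "ONLOAN": {"unavailable": ["loan", "presentation"]},
}


def to_daia(doc_id, ils_records):
    """Map (item id, ILS status code) pairs to a DAIA-like dictionary.

    Unknown status codes yield an item without availability statements
    rather than a guess.
    """
    items = []
    for item_id, code in ils_records:
        rule = ILS_STATUS.get(code, {})
        items.append({
            "id": item_id,
            "available": [{"service": s} for s in rule.get("available", [])],
            "unavailable": [{"service": s} for s in rule.get("unavailable", [])],
        })
    return {"document": [{"id": doc_id, "item": items}]}


if __name__ == "__main__":
    # Hypothetical document and item identifiers
    print(json.dumps(to_daia("doc-1", [("item-1", "REF")]), indent=2))
```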

With the DAIA Perl package you can concentrate on writing wrappers from your library systems to DAIA, and easily consume and evaluate DAIA-encoded information. Why should everyone write their own routines to, for instance, scrape the HTML output of the OPAC and parse the availability status? One mapping to DAIA should fit most needs, so others can build upon it. DAIA can be helpful not only to connect different library systems, but also to create mashups and services like „Show me on a map where a given book is currently held and available“ or „Send me a tweet when a given book in my library is available again“. If you have more cool ideas for client applications, just let me know!
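The core of the „Send me a tweet“ idea is comparing two availability snapshots. This minimal sketch assumes the responses have already been fetched and parsed into dictionaries loosely modeled on DAIA’s JSON encoding (document, item, available, service); how the data is fetched and how the notification is sent are left out, and the function names are hypothetical.

```python
def available_for(daia: dict, service: str) -> bool:
    """True if any item of any document lists `service` as available."""
    for document in daia.get("document", []):
        for item in document.get("item", []):
            if any(a.get("service") == service for a in item.get("available", [])):
                return True
    return False


def became_available(before: dict, after: dict, service: str = "loan") -> bool:
    """Detect the change that should trigger a notification (tweet, mail, ...)."""
    return not available_for(before, service) and available_for(after, service)
```

Polling a DAIA server periodically and calling `became_available` on the previous and current response is enough for a simple notifier.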

In the context of the ILS Discovery Interface Task Force and its official recommendation, DAIA implements the GetAvailability method (section 6.3.1). There are numerous APIs for several tasks in library systems (SRU/SRW, Z39.50, OpenSearch, OAI-PMH, Atom, unAPI etc.), but there was no open, usable, standard way to simply query whether a copy of a given publication (for instance, a book) is available in a library, in which department, whether you can borrow it or only use it in the library, whether you can get it directly online, or how long it will probably take until it is available again. (Yes, I looked at alternatives like Z39.50, ISO 20775, NCIP, SLNP etc., but none of them was well defined, documented, implemented, and freely usable on the Web.) I hope that DAIA is easy enough for non-librarians to make use of it if libraries provide a DAIA API to their systems. Extensions to DAIA can be discussed, for instance, in the Code4Lib Wiki, but I’d prefer to start with these basic, predefined services:

- presentation: an item can be used inside the institution (in their rooms, in their intranet etc.).

- loan: an item can be used outside of the institution (by lending or online access).

- interloan: an item can be used mediated by another institution. That means you do not have to interact with the institution that was queried for this item. This includes interlibrary loan as well as public online resources that are not hosted or made available by the queried institution.

- openaccess: an item can be used immediately without any restrictions by the institution; you don’t even have to give it back. This applies to Open Access publications and free copies.
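A client will typically receive several of these services for the same document and has to pick one to show. This minimal sketch encodes the four services as an enumeration and chooses the most convenient one; the preference order is a hypothetical client-side choice, not part of DAIA, and only the short service names are handled (DAIA may also use service URIs).

```python
from enum import Enum
from typing import Iterable, Optional


class Service(Enum):
    PRESENTATION = "presentation"  # use inside the institution
    LOAN = "loan"                  # use outside (lending or online access)
    INTERLOAN = "interloan"        # mediated by another institution
    OPENACCESS = "openaccess"      # immediately, without restrictions


# Hypothetical preference of a client: most convenient service first.
PREFERENCE = [Service.OPENACCESS, Service.LOAN, Service.PRESENTATION, Service.INTERLOAN]


def parse_service(name: str) -> Service:
    """Map a short service name to the enum; raises ValueError if unknown."""
    return Service(name.lower())


def best_service(names: Iterable[str]) -> Optional[Service]:
    """Return the most preferred of the offered services, or None."""
    offered = {parse_service(n) for n in names}
    for service in PREFERENCE:
        if service in offered:
            return service
    return None
```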

European kind-of-Code4Lib conference

12 February 2009, 16:01 (no comments)

On April 22-24 the European Library Automation Group Conference (ELAG 2009) will take place at the University Library in Bratislava. This is the 33rd ELAG and will be my first. I am happy to see that digital library conferences and developer meetups don’t only take place in the US. Hopefully ELAG is like the Code4Lib Conference, which takes place on February 23-26 this year for the 4th time. Maybe we could have a joint Code4Lib Europe / ECDL sometime in the next few years? But first, „meet us at ELAG 2009“!

Other library-related events in Germany that I will attend in the coming months include the 10th Sun Summit Bibliotheken (March 18/19 in Kassel), ISI 2009 (April 1-3 in Konstanz), BibCamp 2009 (May 15-17 in Stuttgart) and the annual Bibliothekartag (June 2-5 in Erfurt). I am not sure yet about the European Conference on Digital Libraries (ECDL 2009, September 27 to October 2 in Corfu, Greece); the Call for Contributions is open until March 21.

Quick overview of Open Access LIS journals

14 October 2008, 21:12 (no comments)

I just stumbled upon the article „Evaluating E-Contents Beyond Impact Factor – A Pilot Study Selected Open Access Journals In Library And Information Science“ by Bhaskar Mukherjee (Journal of Electronic Publishing, vol. 10, no. 2, Spring 2007). It contains a detailed analysis of five Open Access journals in Library and Information Science, namely Ariadne, D-Lib Magazine, First Monday, Information Research, and Information Technology and Disabilities.

A more comprehensive list of OA journals in the LIS field can be found in the Directory of Open Access Journals (DOAJ). It currently lists 89 journals. Not all of them are highly relevant and active, so how do you compare them? The traditional journal impact factor is obviously rubbish, and most journals are not covered anyway. In a perfect world you could easily harvest all articles via OAI-PMH, extract all references via their identifiers, and create a citation network of the Open Access world of library science; maybe you should also include some repositories like E-LIS. But maybe you can measure impact in other ways. Why not include blogs? Instead of laboriously writing a full research paper for JASIST to „evaluate the suitability of weblogs for determining the impact of journals“ (ad-hoc title), I quickly used Google Blogsearch to count links from weblog entries to some journal pages:

- First Monday: 4,791

- D-Lib Magazine: 2,366

- Ariadne: 1,579

- Information Research: 372

- Issues in Science & Technology Librarianship: 249

- Code4Lib Journal: 209

- Libres: 61

- Journal of Library and Information Technology: 10

I freely admit that my method is insufficient: you would first have to evaluate Google Blogsearch, check URL patterns, divide the numbers by age or by number of articles, etc. Fortunately my blog is not peer-reviewed. But you can comment!
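The normalization step mentioned above is easy to sketch. The counts below are the blog-link numbers from the list; the denominators (journal age in years, or number of articles) are not given here and must be supplied by whoever repeats the experiment, so the function simply skips journals without a known denominator. The names `BLOG_LINKS` and `normalized` are hypothetical.

```python
# Blog-link counts from the list above (Google Blogsearch, at the time of writing).
BLOG_LINKS = {
    "First Monday": 4791,
    "D-Lib Magazine": 2366,
    "Ariadne": 1579,
    "Information Research": 372,
    "Issues in Science & Technology Librarianship": 249,
    "Code4Lib Journal": 209,
    "Libres": 61,
    "Journal of Library and Information Technology": 10,
}


def normalized(counts, denominators):
    """Divide each count by a per-journal denominator (e.g. years in existence
    or number of articles) and rank the result, highest first.

    Journals without a known, positive denominator are skipped.
    """
    scores = {
        name: counts[name] / denominators[name]
        for name in counts
        if denominators.get(name, 0) > 0
    }
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
```

Normalizing by article count would favor small but frequently linked journals such as the young Code4Lib Journal; normalizing by age rewards journals that attract links quickly.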

A note on German OA LIS journals: It’s a shame that, while German librarians mostly read German library journals, only two of these are truly Open Access: besides the more specialized GMS Medizin-Bibliothek-Information there is LIBREAS (blog count: 71). Its current CfP is for a special issue on Open Access and the humanities!

P.S.: Why do so many LIS journals use insane and ugly URLs instead of clean and stable ones like http://JOURNALNAME.TLD/ISSUE/ARTICLE? This is like printing a journal on toilet paper! Apache rewrite rules, virtual hosts, and reverse proxies exist for a good reason.
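The JOURNALNAME.TLD/ISSUE/ARTICLE pattern is simple enough that building and resolving such URLs takes only a few lines. This minimal sketch assumes a hypothetical host `journal.example` and that issue and article identifiers are plain path segments; in a real setup the mapping to a legacy URL would more likely be done in the web server, for instance with rewrite rules.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit


def clean_url(host: str, issue: str, article: str) -> str:
    """Build a URL following the JOURNALNAME.TLD/ISSUE/ARTICLE pattern."""
    return f"http://{host}/{issue}/{article}"


def parse_clean_url(url: str) -> Optional[Tuple[str, str, str]]:
    """Split such a URL into (host, issue, article); None if it does not fit."""
    parts = urlsplit(url)
    segments = [s for s in parts.path.split("/") if s]
    if parts.query or len(segments) != 2:
        return None  # e.g. a query-string URL like index.php?id=123
    return parts.netloc, segments[0], segments[1]
```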

P.P.S.: IFLA had a Section on Library and Information Science Journals from 2002 to 2005.
